Assessment at the Campus Level

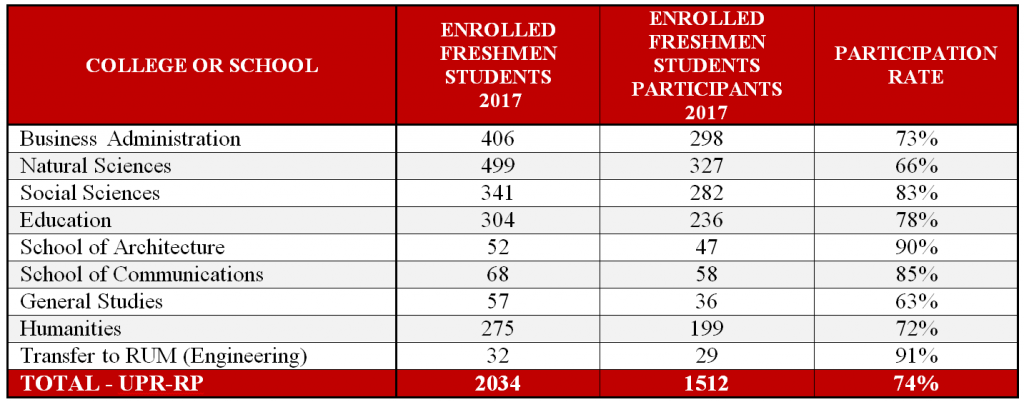

To measure student learning outcomes uniformly across a sample of students from the different academic programs of the Colleges and Schools, assessment instruments were designed by professors who are experts in each area and validated with a small representative sample. The assessment of undergraduate student learning at this level focused on the following outcomes: (1) logical-mathematical reasoning, (2) effective communication in Spanish, (3) effective communication in English, (4) information literacy, and (5) critical thinking skills (in progress).

Logical Mathematical Reasoning

Entry Level Test

The committee in charge of developing the Logical Mathematical Reasoning Test was an interfaculty group of professors and OEAE personnel. The participating professors belong to six colleges or schools (Business Administration, Architecture, Natural Sciences, Social Sciences, General Studies and Education).

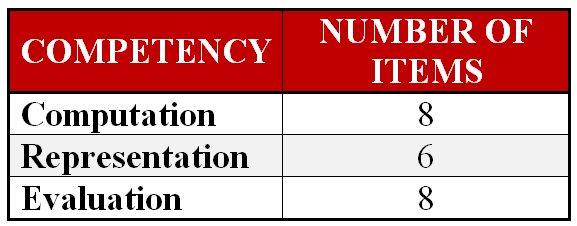

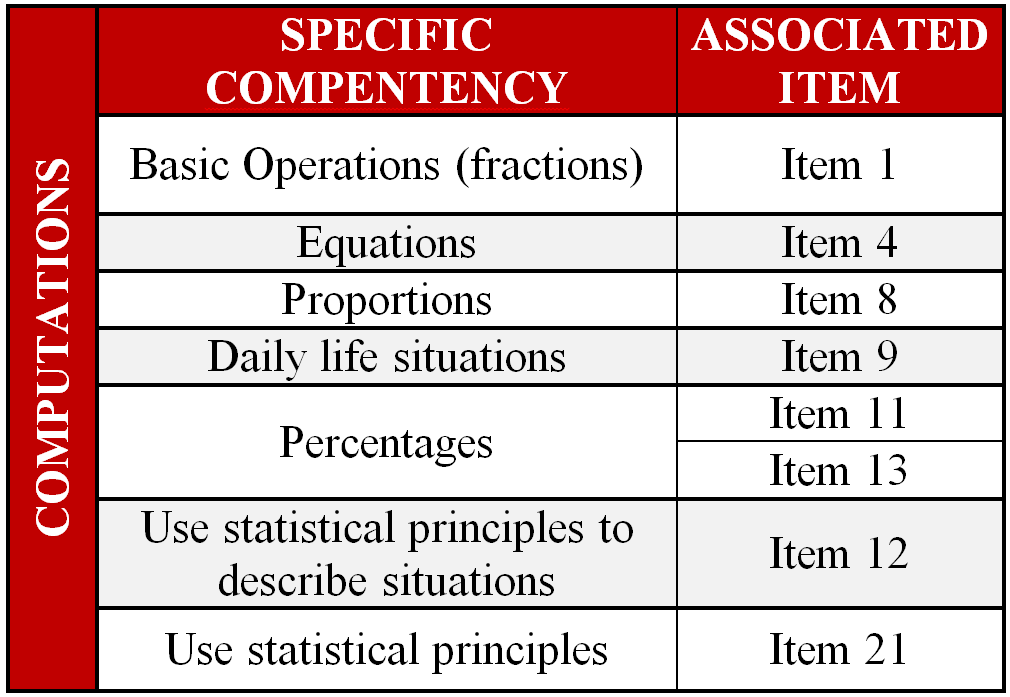

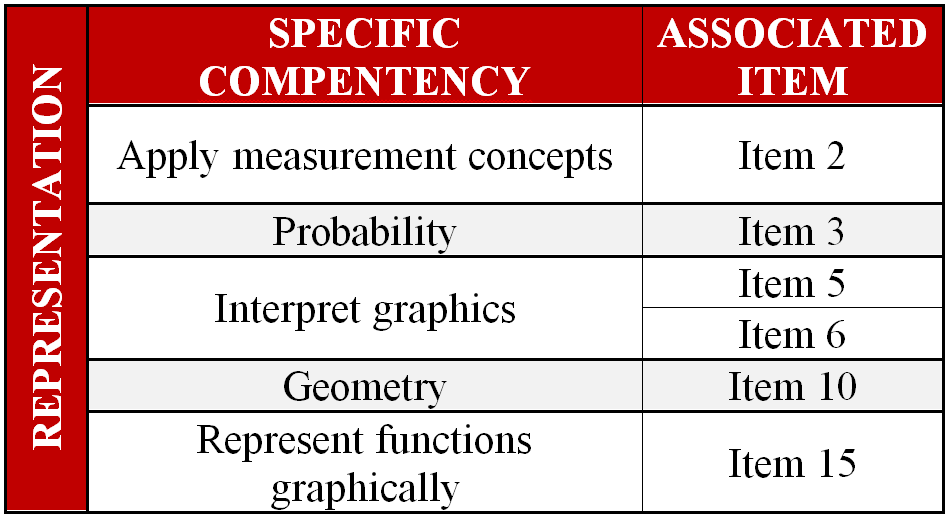

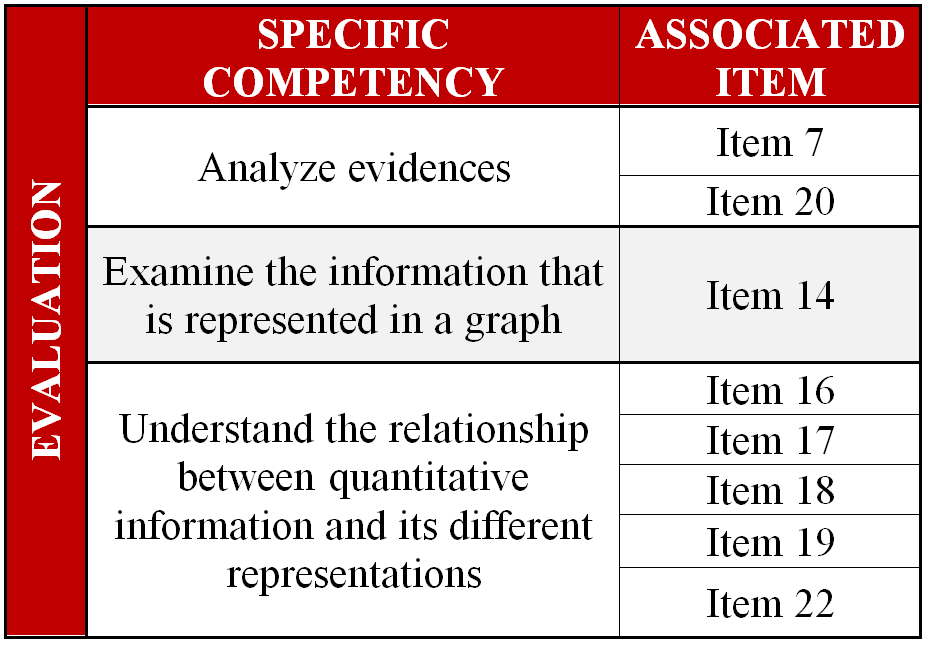

The test aims to assess the learning outcome of Logical Mathematical Reasoning in three competency areas: computation, representation and evaluation. Each of the twenty-two items in the test measures one of the three competency areas.

Description of each competency area:

- Computation: Understanding and using arithmetic, algebra and statistics to resolve problems.
- Representation: Comprehend and interpret mathematical models, which are represented through equations, graphs and charts to make inferences based on them and solve problems.
- Evaluation: Critically think about the usage of quantitative information.

The Logical Mathematical Reasoning Test consisted of 22 items. The following table presents the number of items by competency area.

The following tables present the specific competencies according to their competency area.

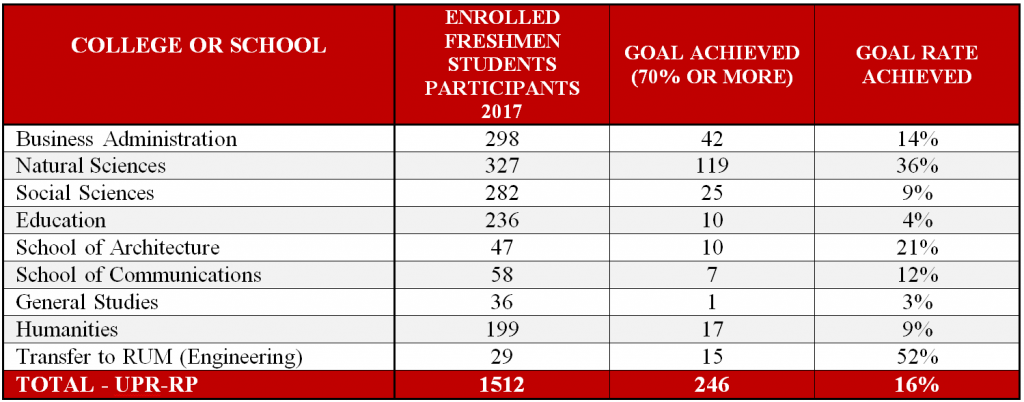

The expected outcome was that all students reach 70% or more in each of the competency areas (computation, representation and evaluation) and in the totality of the test.
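As an illustration of how the 70% goal can be checked per competency area and for the whole test, the following is a minimal Python sketch. The item-to-area layout, the sample responses, and the names `area_scores` and `share_reaching_goal` are hypothetical; the actual allocation of the 22 items is the one given in the report's table, and this is not the OEAE's own scoring procedure.

```python
from typing import Dict, List

GOAL = 0.70  # expected outcome: a score of 70% or more

def area_scores(responses: List[bool], item_area: List[str]) -> Dict[str, float]:
    """Fraction of correct items per competency area, plus the whole test ('total')."""
    answered: Dict[str, int] = {}
    correct: Dict[str, int] = {}
    for is_correct, area in zip(responses, item_area):
        answered[area] = answered.get(area, 0) + 1
        correct[area] = correct.get(area, 0) + int(is_correct)
    scores = {area: correct[area] / answered[area] for area in answered}
    scores["total"] = sum(responses) / len(responses)
    return scores

def share_reaching_goal(students: List[List[bool]], item_area: List[str],
                        goal: float = GOAL) -> Dict[str, float]:
    """Fraction of students whose score is at or above the goal, per area and overall."""
    reached: Dict[str, int] = {}
    for responses in students:
        for area, score in area_scores(responses, item_area).items():
            reached[area] = reached.get(area, 0) + int(score >= goal)
    return {area: count / len(students) for area, count in reached.items()}

# Hypothetical toy layout: 6 items, 2 per area (the real test has 22 items).
item_area = ["computation", "computation",
             "representation", "representation",
             "evaluation", "evaluation"]

# Hypothetical responses for two students (True = correct answer).
students = [
    [True, True, True, False, True, True],
    [True, False, True, True, False, False],
]

shares = share_reaching_goal(students, item_area)
for area, share in shares.items():
    print(f"{area}: {share:.0%} of students reached the goal")
```

Under the expected outcome stated above, every share would need to be 100%; in practice the report presents the percentage of students who reached the goal in each area.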

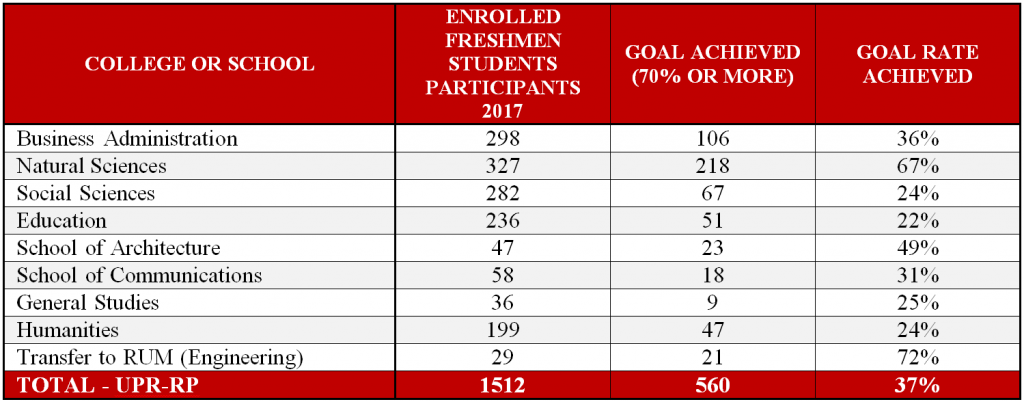

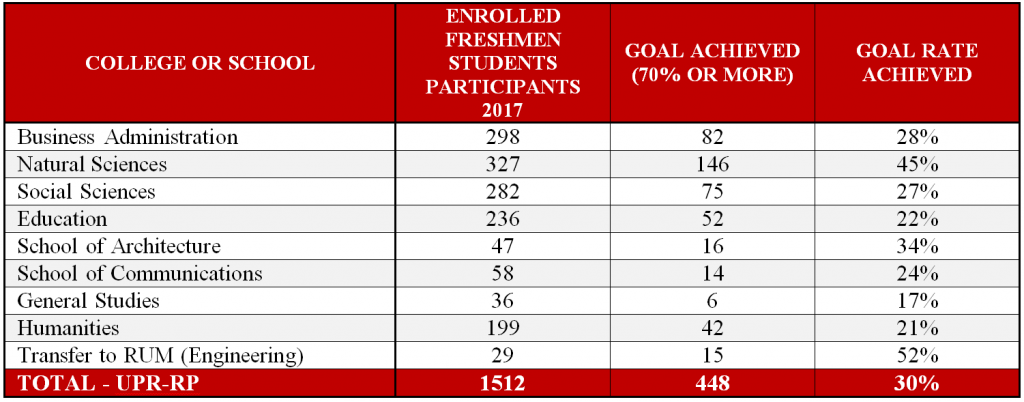

Achieved Goal of 70% or more in the Logical Mathematical Reasoning Test - Competence Area: Computations

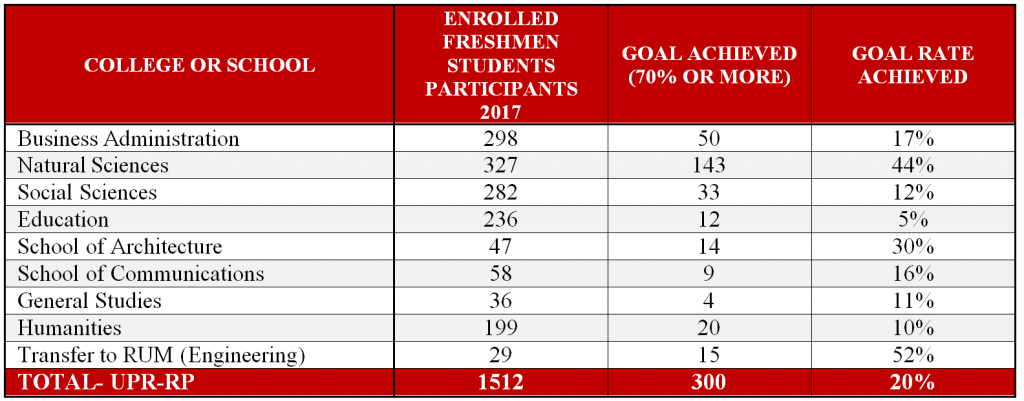

Achieved Goal of 70% or more in the Logical Mathematical Reasoning Test - Competence Area: Representation

Achieved Goal of 70% or more in the Logical Mathematical Reasoning Test - Competence Area: Evaluation

Achieved Goal of 70% or more in the Logical Mathematical Reasoning Test - All Areas of Competence - General

The complete institutional report of 2017 can be accessed through the following link:

To access the results of the Logical Mathematical Reasoning Test given at entry level to freshmen students 2017 by college or school, click on the links shown below:

General Level Test

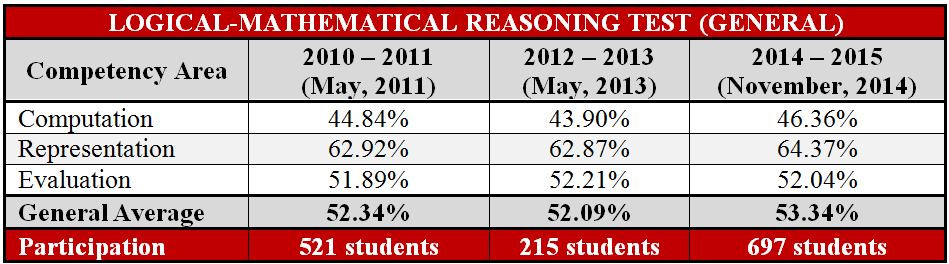

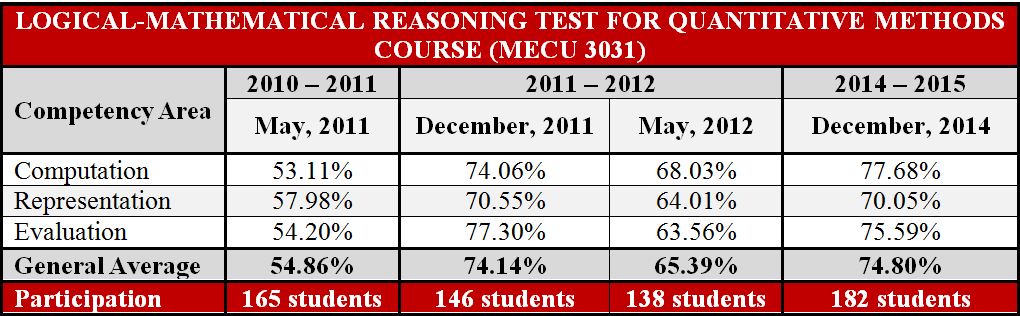

A test designed by a committee of experts to assess logical-mathematical reasoning skills was validated and administered to several sections of the math courses taken by students who are not from the Colleges of Business Administration and Natural Sciences to fulfill this general education component of the Bachelor's degree. This test was administered for the first time in May 2011, and again in May 2013 and November 2014. Additional measures, geared to course modifications and tutor training, were developed to strengthen student learning in this outcome. A tutoring system, coordinated by an experienced professor, was implemented to address students’ learning needs in this outcome. Discussions on how to improve teaching and learning in this area are currently underway. The OEAE personnel met with the Director of the Mathematics Department and with the Department Assessment Coordinator to discuss the need to design a learning experience geared to reinforce students' logical-mathematical reasoning skills.

A similar test was administered to students from the College of Business Administration who enrolled in the Pre-Calculus course (Quantitative Methods course, MECU) as a requisite to comply with the general education logical-mathematical reasoning component. The following table presents results by competency area and compares them across each instance in which a similar test was administered in the Quantitative Methods course.

Effective Written Communication (Spanish)

Entry Level Test

The complete institutional report of 2017 can be accessed through the following link:

To access the results of the Effective Written Communication Test (Spanish) given at entry level to freshmen students 2017 by college or school, click on the links shown below:

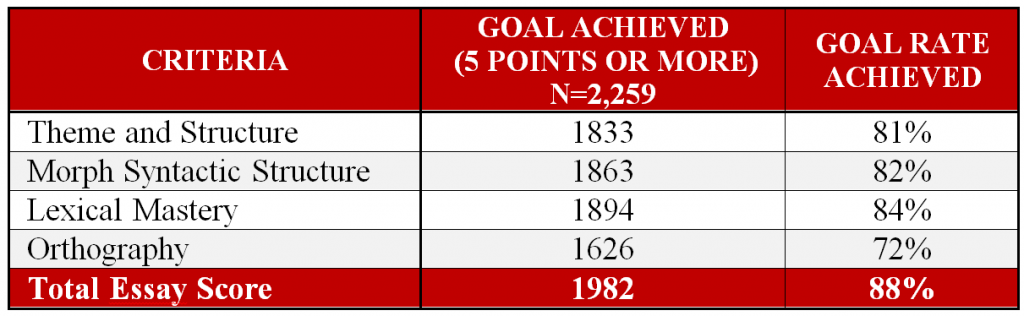

A fourth institutional test in Effective Written Communication Skills in Spanish, similar to the one administered in August 2012 (but with a rubric that assessed each criterion on a scale of 1-8 through four different levels), was planned and designed by Spanish professors from the UPR-RP who are experts in the Spanish language. It was administered to the incoming 2015-16 class on August 6, 2015, the day on which the Institution schedules the analysis of course registration for the upcoming academic semester. A total of 2,259 students (77% of the incoming freshman class) took the test. The theme, structure, and orthography criteria need special attention. Assessment results will be sent to the students and to the Spanish Departments of the Colleges of General Studies and Humanities so that the areas of concern can be addressed in their courses. The following table presents the number of students who obtained 5 points or more in each criterion assessed and in the overall test.

Group Performance by Criteria in the Effective Written Communication Test (Spanish) Administered in August 2015 to Freshmen Students (N=2,259)

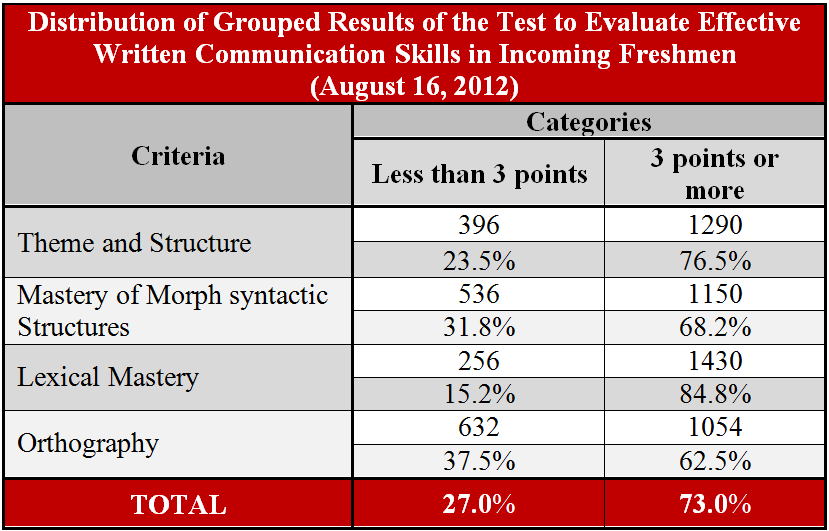

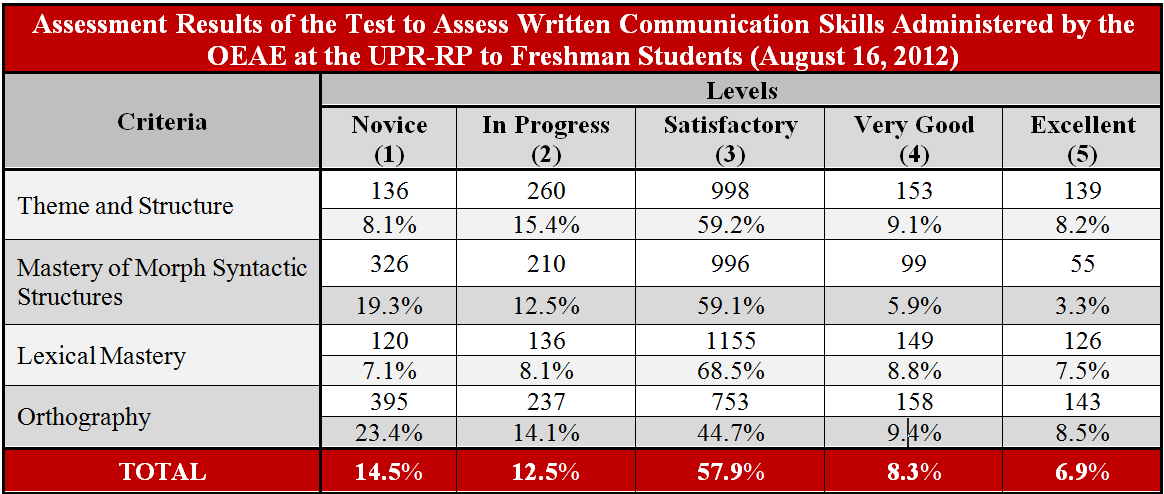

A similar test given in 2012 assessed the same criteria with a different rubric. The expected outcome was that 70% of the students assessed would obtain 3.0 points or more in each test criterion on the 5.0-point rubric used. Although the average performance percentage across all areas was higher than 70%, two of the assessed criteria (Morphosyntactic Structures Mastery and Orthography) need to be improved through transformative actions geared to reinforce these skills. The following table presents grouped results by the number of students assessed who obtained 3 or more points in the rubric used.

During the academic year 2011-12, OEAE personnel planned the second instance of institutional assessment of student learning for effective written communication in Spanish. Spanish professors from UPR-RP, experts in the Spanish language, designed a similar test. Unlike the previous initiatives in this area, the UPR-RP was in charge of all aspects of this effort, which dramatically increased cost effectiveness. A total of 1,686 students, 80% of the incoming freshman class, took the test on August 16, 2012.

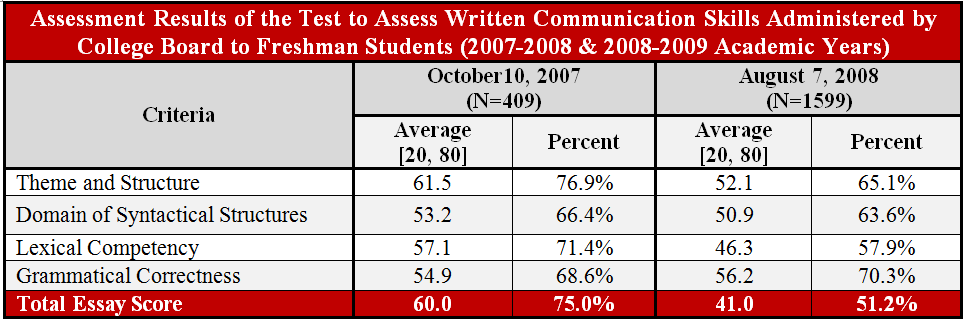

The OEAE evaluated the writing competencies of the 2007-08 incoming class, a milestone campus‐wide effort. For this project, a writing test was designed and administered by the College Board to students enrolled in the first nine academic programs approved under the restructured undergraduate degrees (i.e., Physics, Mathematics, General Science, Biology, Journalism and Information, Audiovisual Communication, Public Relations and Advertising, Fine Arts, and Interdisciplinary Studies). A total of 409 newly accepted students (58% of the total who enrolled in the aforementioned programs) participated. The areas assessed were: theme and structure, lexical competency, syntactical structures mastery, and grammatical correctness. Collaborative efforts with the College Board continued in 2008‐09. A writing test was administered to a sample of 1,604 newly admitted students (82% of those accepted under new undergraduate degree requirements).

The following table presents results from the competency areas assessed in the test in the 2007 and 2008 academic years. It should be noted that students assessed in 2007 are from academic programs with higher entrance academic indexes.

Exit Level Test

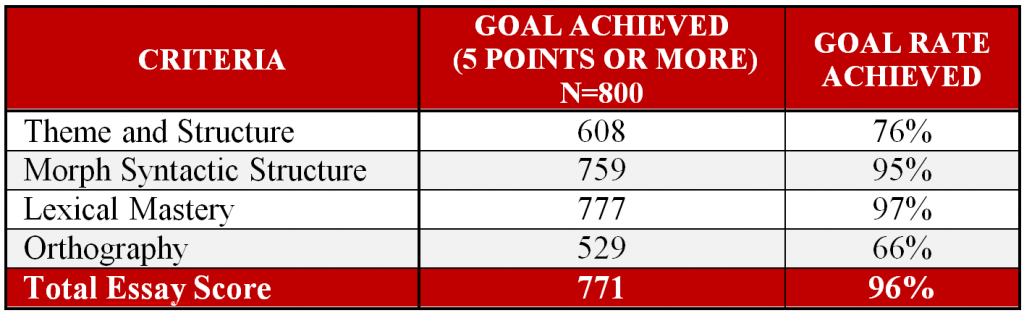

During the second semester of the 2014-15 academic year, the OEAE administered a test to a sample of 800 students near the end of their undergraduate degrees. This was done in order to assess their effective Spanish communication skills in advanced courses as an exit measure and to obtain information to be used in establishing what areas tend to improve over the course of the degree. The following table presents the number of students who obtained 5 points or more in each criterion assessed and in the overall test.

Group Performance by Criteria in the Effective Written Communication Test (Spanish) Administered in April 2015 to a Sample of Students Near Completion of their Undergraduate Degrees (N=800)

College of Education - Results of the Spanish Writing Skills Test - Exit Level (April 2015)

College of General Studies - Results of the Spanish Writing Skills Test - Exit Level (April 2015)

College of Humanities - Results of the Spanish Writing Skills Test - Exit Level (April 2015)

College of Natural Sciences - Results of the Spanish Writing Skills Test - Exit Level (April 2015)

College of Social Sciences - Results of the Spanish Writing Skills Test - Exit Level (April 2015)

School of Architecture - Results of the Spanish Writing Skills Test - Exit Level (April 2015)

School of Communication - Results of the Spanish Writing Skills Test - Exit Level (April 2015)

Effective Written Communication (English)

Entry Level Test

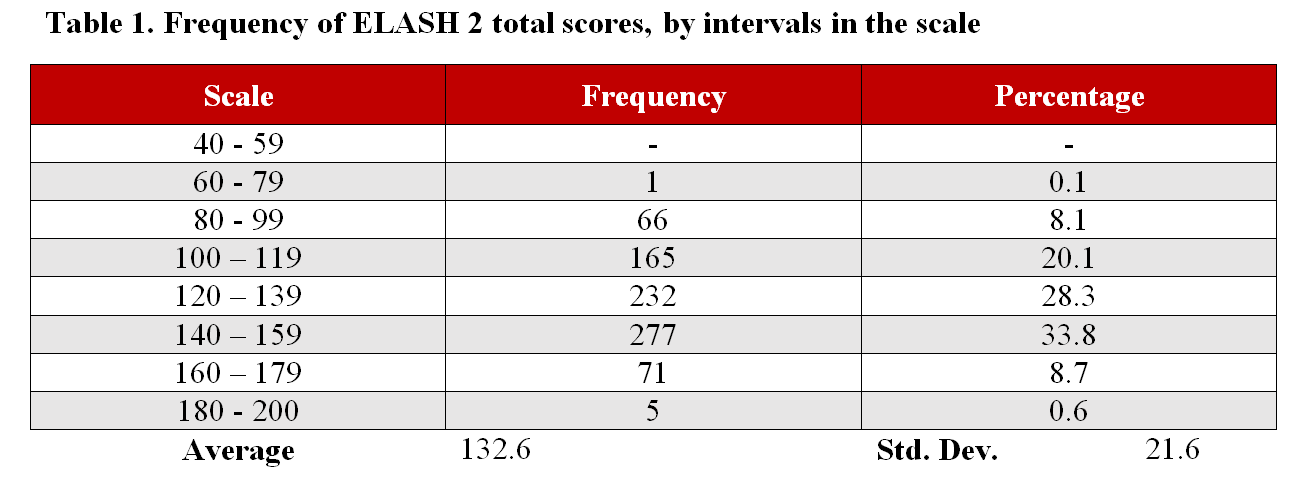

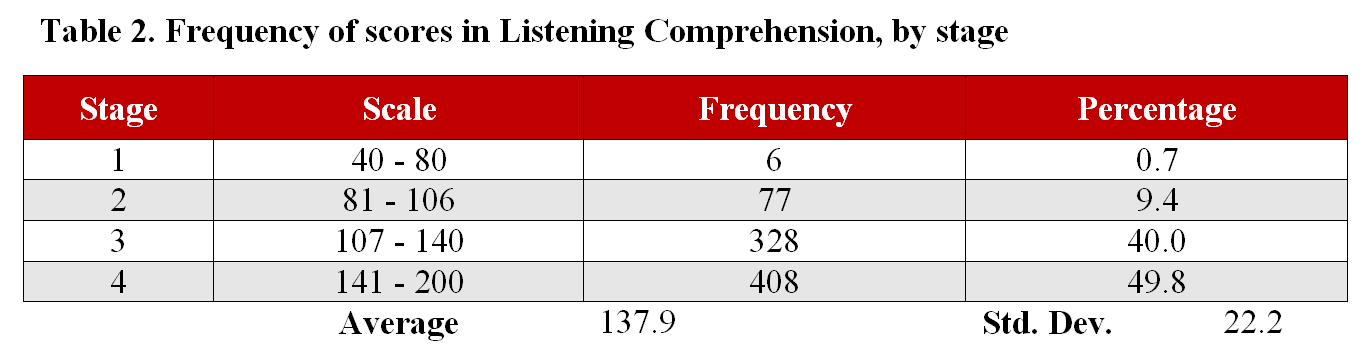

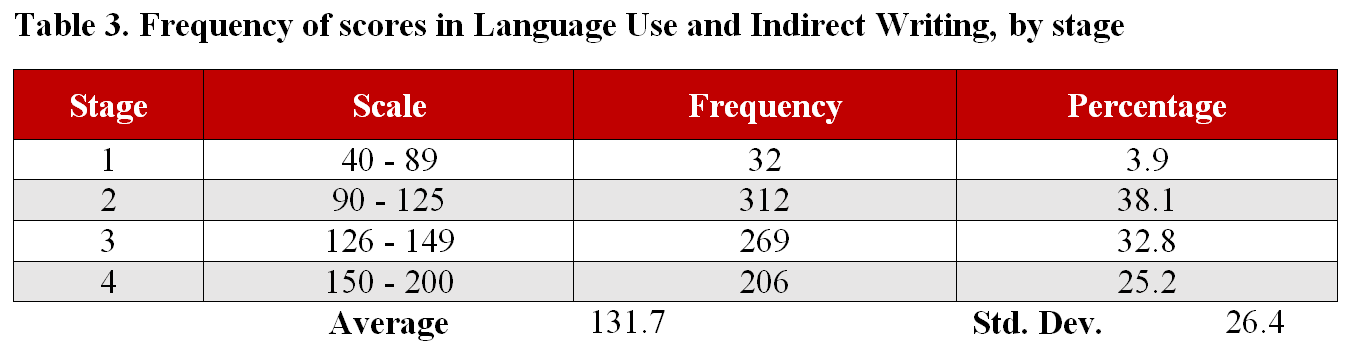

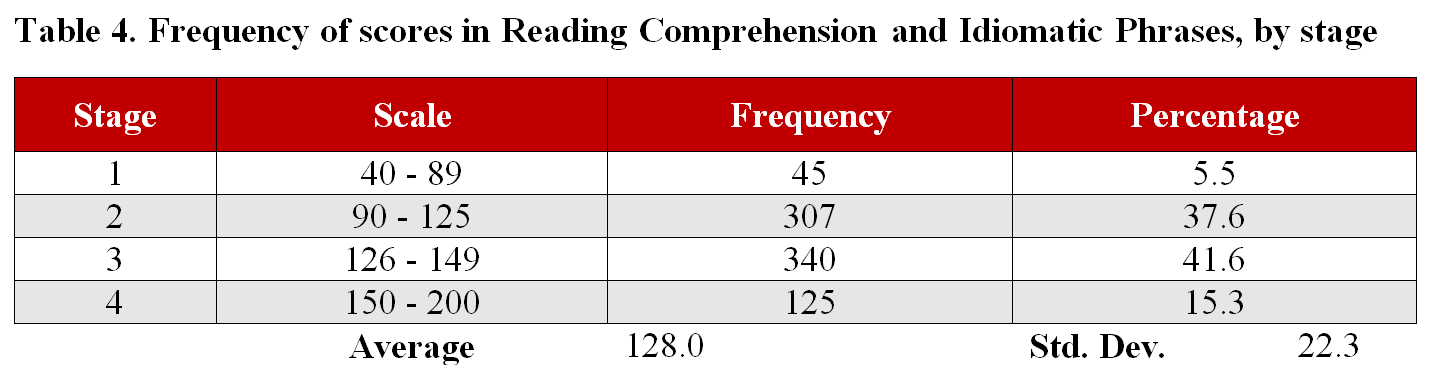

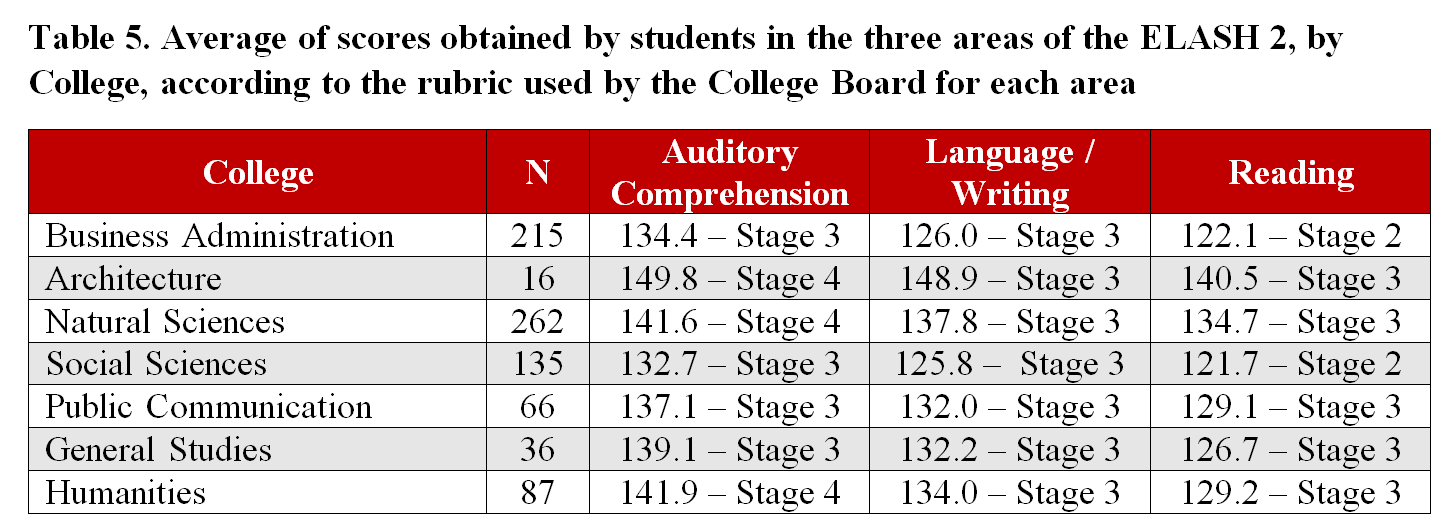

As part of institutional efforts to assess writing skills in English, and in coordination with the College Board, an English language assessment test (ELASH II, English Language Assessment System for Hispanics II) was administered to a sample of 819 newly admitted students in the first semester of the academic year 2008‐09. The test was administered on August 7, 2008, the same day, after the students finished the Spanish effective communication test. The ELASH II test evaluates the following skills: Listening Comprehension, Language Use and Indirect Writing, and Reading Comprehension and Idiomatic Phrases. The scores for each skill were categorized according to four levels: advanced (stage 4), high intermediate (stage 3), low intermediate (stage 2), and novice (stage 1). The following four tables present the number of students by intervals in the scale.

The following table presents the average scores obtained by the students in each area assessed in the ELASH II test, by College or School.

Most of the participating students from the different Colleges and Schools scored between the advanced and high intermediate levels in each skill assessed, as can be seen in the following tables (scores by skill, and scores by College or School for each level).

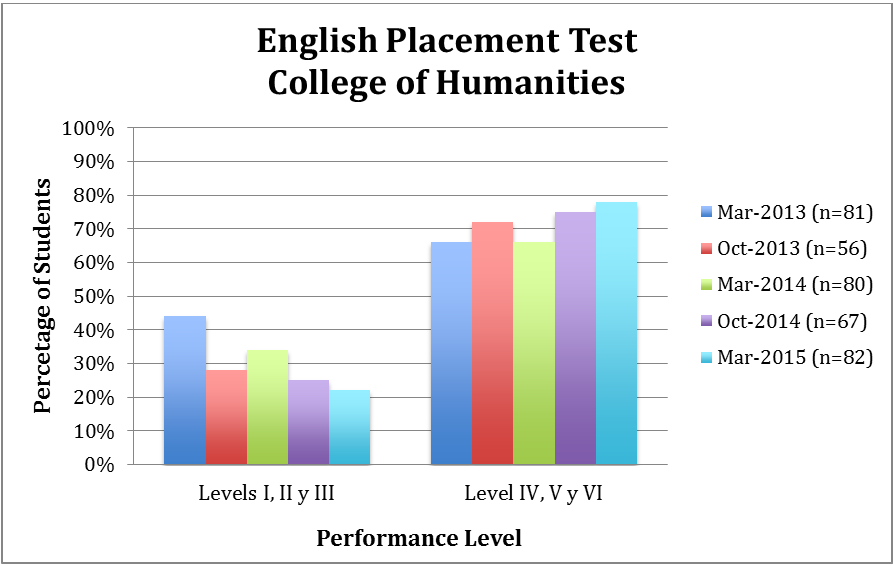

At the same time, the English Department of the College of Humanities started an assessment process in Oral Communication Competencies for students who were taking English as a Second Language (ESL) in fulfillment of their second-year English requirements. Rubrics were developed for use in the second semester of the 2009-10 academic year. These actions provided important information because they identified aspects of language fluency specific to the courses in question. Results of the test facilitated the placement of students in the appropriate sections according to their performance level. The Humanities English Placement Test (HEPT) has played a primary role in the evaluation and placement of our undergraduate students into the most appropriate levels of competency. This placement test is offered twice per academic year and focuses on the evaluation of skills such as written and oral comprehension and composition. Analysis of the test results in the last two and a half academic years indicated that most of the students who took the HEPT are classified in the highest-level courses. The next figure represents this result from March 2003 to March 2015.

Information Literacy

An operational definition for these competencies, adapted from the Association of College and Research Libraries (ACRL), was established, and learning objectives were designed for the initial and developmental levels. A series of workshops for faculty training in the assessment of these competencies was organized. These workshops, which were sponsored by various colleges and schools, focused on writing learning objectives for course syllabi, selecting appropriate learning activities, and designing an assessment rubric.

The assessment of student learning outcomes in the area of information literacy is most evident in campus projects that target students in specific schools and colleges. The UPR-RP Library System has provided much of the support and vision needed for these initiatives. Four are highlighted: 1) the Information Literacy and Research Program; 2) the Pilot Program for Distance Education; 3) the Project for the Integration of Information Literacy to Curriculum (PICIC, for its Spanish acronym); and 4) the Natural Sciences Information Literacy Project.

1. The Information Literacy and Research Program

Based in the Architecture Library, this program started in 2009 and caters to students and professors of the School of Architecture, at both the undergraduate and graduate levels. It led to the establishment of five instructional modules for the development of information literacy competencies, as established by ACRL/ALA guidelines. In addition, librarians offered workshops, conferences, and individual consultations on identifying research topics, strategies for identifying and obtaining information, criteria for evaluating information, academic honesty and plagiarism, professional style manuals, and preparing theses and end-of-degree projects.

2. Pilot Program for Distance Education

This program has two instructional designers who support professors in the School of Architecture in creating distance education courses and in producing teaching and assessment tools. Special attention is given to the assessment of information literacy.

3. Project for the Integration of Information Literacy to Curriculum (PICIC Project)

Three librarians from the UPR-RP Library System participated in several tracks of the ACRL Information Literacy Immersion Program (i.e., teaching track 2009, assessment track 2010, and teaching with technology track 2013) as preparation for launching the PICIC Project. The project utilizes the “assessment as learning” philosophy developed by Alverno College. Three UPR-RP Colleges participated in the project: Business Administration, General Studies, and Education.

In the 2012-13 academic year, the College of Business Administration trained 60% of its undergraduate and 73% of its graduate student enrollment. The College of General Studies trained 100% of its undergraduates, and 93% of the faculty integrated information literacy into their syllabi and course content. These colleges adopted a common set of information literacy learning objectives, as approved by the campus committees (Appendix 5.9). As some familiar with this project have pointed out, the development of a standardized assessment instrument would make it easier to draw useful comparisons across colleges.

4. Natural Sciences Information Literacy Project

The Natural Sciences Information Literacy Project strives to ensure that students understand the importance of learning and mastering information literacy skills in ways that complement the knowledge and skills of their major area and the learning objectives at the institutional level. The information literacy competencies were incorporated into the course syllabi along with the learning objectives of the course material. The description of the activities used to assess these competencies and the learning objectives of said activities were also included.

For the first cycle, two exercises were developed for General Biology and General Chemistry labs, both in line with ACRL standards for academic programs in science, engineering, and technology. For the first exercise, which focused on the analysis of the parts of a scientific article, the expected outcome was that 70% of the students achieve a score of 70% or more. In the first semester in which this exercise was implemented, a total of 83% of students achieved the expected score. However, problems were noted in students’ responses to questions relating to reference formats. The following semester, as a transformative action, students were provided with online resources that can be used in learning how to cite scientific articles. The share of students who reached the expected outcome then increased to 92%, but problems related to understanding citation persisted among those who did not.

For the second exercise, students completed a semester-long project that culminated with a written paper and an oral presentation. The expected outcome for this exercise was that 65% of the students would obtain a score of “good” or “excellent.” In practice, 63% of students obtained such a score, just short of the target. The main difficulties for students who scored in the lower ranges were: evaluation of the trustworthiness of the sources used, consistency between the references cited and the bibliography, and formatting errors in the bibliography. To provide students with additional support, librarians’ presentations on information literacy were modified. In addition, librarians have worked with assessment coordinators to formulate more effective transformative actions in the classroom.
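The comparison between observed results and expected outcomes in the two exercises can be summarized with a short Python sketch. The percentages are the ones reported above; the function name `outcome_met` and the labels are hypothetical and chosen only for illustration.

```python
def outcome_met(observed_pct: float, expected_pct: float) -> bool:
    """True when the share of students reaching the score is at or above the target share."""
    return observed_pct >= expected_pct

# (label, observed % of students, expected % of students), as reported in the text.
cycles = [
    ("Exercise 1, first semester", 83, 70),
    ("Exercise 1, following semester", 92, 70),
    ("Exercise 2", 63, 65),
]

for label, observed, expected in cycles:
    status = "met" if outcome_met(observed, expected) else "not met"
    print(f"{label}: {observed}% observed vs. {expected}% expected -> {status}")
```

A check of this kind only flags whether a target was reached; deciding on transformative actions still depends on the qualitative review of student difficulties described above.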

Updated by Arlene Fontánez on August 10, 2018